A/B Testing vs Split Testing: When & How to Use Each

A comprehensive guide for marketers, CRO specialists, and growth teams

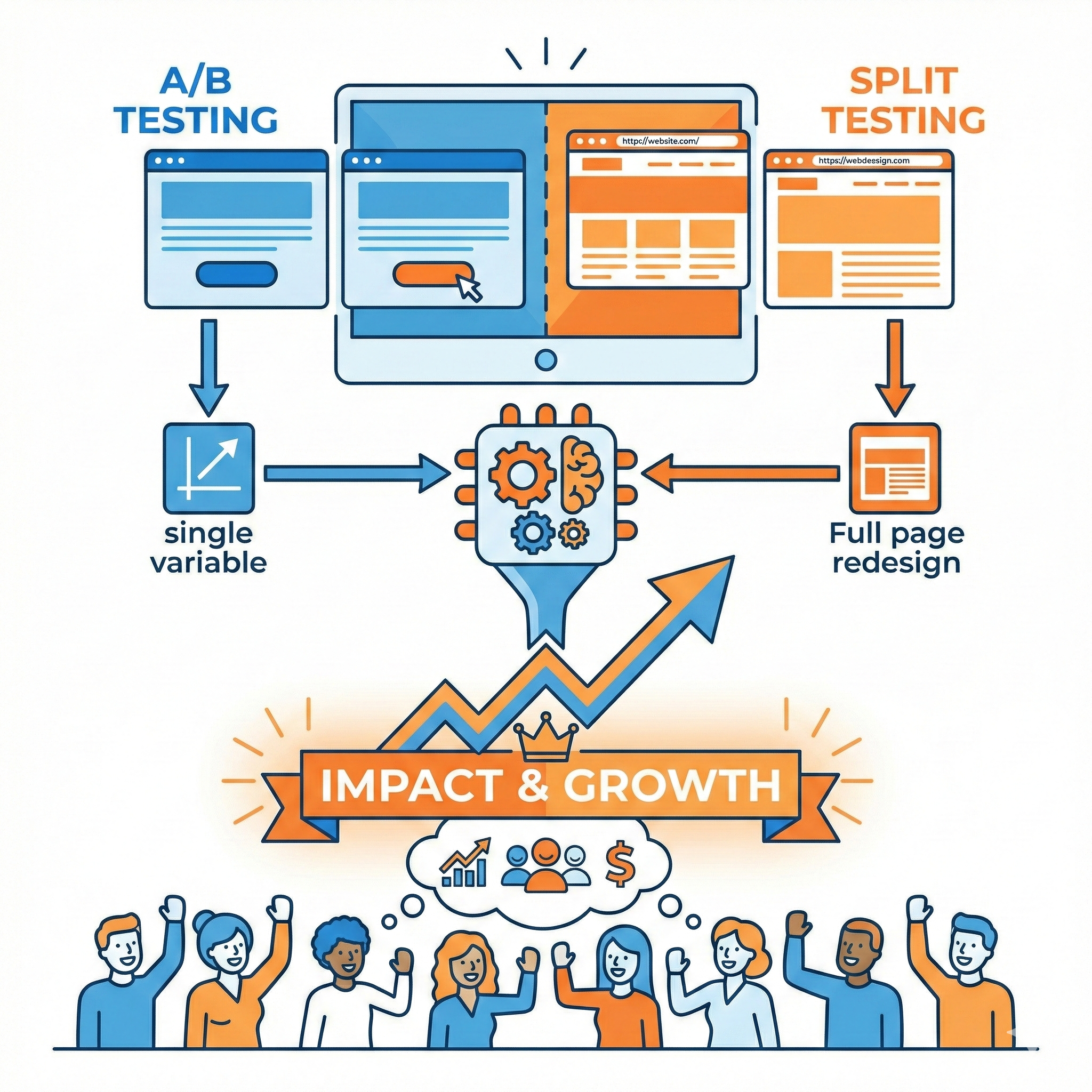

Optimization is no longer optional in digital marketing. Whether you run paid ads, landing pages, SaaS funnels, or eCommerce stores, testing is the foundation of improving performance. However, one of the most misunderstood topics in Conversion Rate Optimization (CRO) is the difference between A/B testing and split testing.

Many marketers use the terms interchangeably — but they are not always the same. Understanding the difference helps you choose the right method, avoid invalid results, and maximize ROI.

This in-depth guide explains:

What A/B testing is

What split testing is

Key differences between them

When to use each method

Step-by-step implementation

Statistical significance & traffic requirements

Tools & best practices

Common mistakes to avoid

Real-world examples

Advanced optimization strategies

Understanding Testing in Conversion Optimization

Testing allows marketers to:

✔ Improve conversion rates

✔ Reduce acquisition costs

✔ Increase engagement & retention

✔ Improve UX and customer journey

✔ Make data-driven decisions

✔ Scale winning strategies

Instead of guessing what works, testing lets users decide.

What is A/B Testing?

Definition

A/B testing (also called bucket testing) compares two versions of a page, email, or ad that differ in a single element to determine which performs better.

Visitors are randomly shown Version A or Version B, and performance metrics are measured.

Example

You test:

Version A → Blue CTA button

Version B → Orange CTA button

Everything else remains identical.

How A/B Testing Works

Identify a variable to test

Create Version A (control)

Create Version B (variation)

Split traffic randomly

Measure performance

Determine statistical significance

Implement winner
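The "Split traffic randomly" step is usually done by bucketing each visitor deterministically, so a returning visitor keeps seeing the same version. Below is a minimal Python sketch of one common approach. It assumes each visitor has a stable ID (for example, from a first-party cookie). The function name `assign_variant` and the experiment key are hypothetical, and commercial tools may bucket visitors differently.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, split: float = 0.5) -> str:
    """Return "A" (control) or "B" (variation) for a visitor.

    Hashing the experiment name together with the visitor ID gives a stable,
    roughly uniform number in [0, 1). The same visitor always lands in the same
    bucket for a given experiment, and different experiments bucket independently.
    `split` is the share of traffic sent to A (hypothetical parameter).
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # First 8 hex characters = 32 bits, scaled into [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "A" if bucket < split else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}", "cta-color-test")] += 1
    print(counts)  # counts should be close to a 50/50 split
```

Deterministic hashing avoids storing an assignment table and keeps the experience consistent across visits. Including the experiment name in the hash keeps one test's buckets from correlating with another's.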

What Can You Test with A/B Testing?

1. Headlines

Emotional vs direct

Benefit-driven vs curiosity-based

2. Call-to-Action (CTA)

Color

Placement

Text (“Buy Now” vs “Get Started”)

3. Images

Product vs lifestyle imagery

Human faces vs graphics

4. Form Fields

3 fields vs 6 fields

Email first vs phone first

5. Pricing Display

Monthly vs yearly emphasis

Discount messaging

6. Email Subject Lines

Personalized vs generic

Urgency vs curiosity

When A/B Testing Works Best

Use A/B testing when:

✅ You want to test one specific change

✅ Traffic is moderate or limited

✅ You want quick insights

✅ You are optimizing micro-elements

✅ You need clear cause-and-effect results

Advantages of A/B Testing

✔ Easy to implement

✔ Clear results

✔ Low risk

✔ Ideal for continuous optimization

✔ Requires less traffic

✔ Precise insights

Limitations

✖ Tests only one change at a time

✖ Can be slow for large improvements

✖ May miss interaction effects

What is Split Testing?

Definition

Split testing compares two completely different versions of a page, design, or experience.

Instead of changing one element, you test entire variations.

Example

Version A → Long-form landing page

Version B → Minimalist short page

OR

Version A → Traditional layout

Version B → Video-first design

How Split Testing Works

Create two distinct page versions

Host on separate URLs or variants

Divide traffic between versions

Track performance metrics

Analyze conversion differences
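For split URL tests, the assignment usually ends in a redirect to the variant's own URL. This sketch assumes two hypothetical URLs on `example.com` and a stable visitor ID. It hashes the visitor into a variant and carries over the original query string so UTM or ad-click parameters survive the redirect. It illustrates the idea and does not reproduce the behavior of any particular testing tool.

```python
import hashlib
from urllib.parse import urlsplit, urlunsplit

# Hypothetical variant URLs for a split URL test; replace with your own pages.
VARIANT_URLS = {
    "A": "https://example.com/landing",        # control: existing page
    "B": "https://example.com/landing-video",  # variation: separately hosted page
}


def choose_variant(visitor_id: str, experiment: str) -> str:
    """Deterministically map a visitor to one of the variant keys."""
    keys = sorted(VARIANT_URLS)
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).digest()
    return keys[int.from_bytes(digest[:4], "big") % len(keys)]


def redirect_target(request_url: str, visitor_id: str, experiment: str) -> str:
    """Return the URL this visitor should land on.

    The original query string (e.g. UTM tags) and fragment are copied onto
    the variant URL so campaign attribution is not lost in the redirect.
    """
    variant = choose_variant(visitor_id, experiment)
    target = urlsplit(VARIANT_URLS[variant])
    original = urlsplit(request_url)
    return urlunsplit((target.scheme, target.netloc, target.path,
                       original.query, original.fragment))


if __name__ == "__main__":
    print(redirect_target("https://example.com/landing?utm_source=meta",
                          "visitor-42", "landing-redesign"))
```

In practice the control page is usually served in place rather than redirecting to itself, and Google's testing guidance recommends temporary (302) redirects so search engines keep indexing the original URL.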

What Can You Test with Split Testing?

1. Landing Page Designs

Long-form vs short-form

Single column vs multi-column

2. Funnel Structure

One-step checkout vs multi-step checkout

Lead magnet vs direct sale

3. Page Layout

Video hero vs static image

Minimal design vs detailed content

4. Messaging Strategy

Emotional storytelling vs logical benefits

Problem-focused vs aspiration-focused

5. Navigation Experience

With navigation vs distraction-free

Scroll page vs multi-page journey

When Split Testing Works Best

Use split testing when:

✅ You want to test major design differences

✅ You are redesigning a page

✅ You want to compare UX strategies

✅ You suspect layout impacts conversion

✅ You are launching a new funnel approach

Advantages of Split Testing

✔ Tests major improvements

✔ Reveals user experience preferences

✔ Faster large-scale insights

✔ Useful for redesign validation

Limitations

✖ Requires more traffic

✖ Harder to identify exact cause of improvement

✖ More development effort

✖ Higher risk of performance drop

A/B Testing vs Split Testing: Key Differences

| Factor | A/B Testing | Split Testing |
|---|---|---|
| Scope | Single element | Entire design/page |
| Complexity | Low | Medium to high |
| Traffic needed | Low–moderate | Moderate–high |
| Insight type | Micro optimization | Macro experience |
| Implementation | Simple | More technical |
| Risk | Low | Medium |
| Use case | Continuous improvement | Redesign & strategy testing |
| Speed of insight | Gradual | Faster for major changes |
| Root cause clarity | Clear | Less precise |

When to Use A/B Testing vs Split Testing

Use A/B Testing When:

✔ Optimizing landing page elements

✔ Improving CTR in ads

✔ Increasing email open rates

✔ Reducing form friction

✔ Enhancing button performance

✔ Testing micro-copy

Use Split Testing When:

✔ Launching a new website design

✔ Comparing funnel strategies

✔ Testing conversion architecture

✔ Validating UX redesign

✔ Evaluating messaging approach

Step-by-Step Process for Effective Testing

Step 1: Define Objective

Examples:

Increase signups by 20%

Improve checkout completion

Reduce bounce rate

Step 2: Identify KPI Metrics

Primary metrics:

Conversion rate

Revenue per visitor

CPA

CTR

Secondary metrics:

Bounce rate

Time on page

Scroll depth
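The primary metrics above are simple ratios of per-variant totals. Here is a small sketch, assuming you have already collected those totals. The `VariantStats` field names are hypothetical, and CTR only applies when the variant is an ad with its own impressions and clicks.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    visitors: int      # unique visitors who saw the variant
    conversions: int   # completed goals (signups, purchases, ...)
    revenue: float     # total revenue attributed to the variant
    ad_spend: float    # media cost that drove the traffic (0 for organic)
    impressions: int   # ad impressions (for CTR)
    clicks: int        # ad clicks (for CTR)


def primary_metrics(s: VariantStats) -> dict:
    """Compute conversion rate, revenue per visitor, CPA, and CTR."""
    return {
        "conversion_rate": s.conversions / s.visitors,
        "revenue_per_visitor": s.revenue / s.visitors,
        # CPA is undefined when nothing converted; report infinity instead.
        "cpa": s.ad_spend / s.conversions if s.conversions else float("inf"),
        # CTR only makes sense when the variant had ad impressions.
        "ctr": s.clicks / s.impressions if s.impressions else 0.0,
    }


if __name__ == "__main__":
    stats = VariantStats(visitors=5_000, conversions=150, revenue=7_500.0,
                         ad_spend=3_000.0, impressions=100_000, clicks=5_000)
    print(primary_metrics(stats))
```

With these illustrative totals the variant converts at 3%, earns 1.50 per visitor, costs 20 per acquisition, and has a 5% CTR.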

Step 3: Develop Hypothesis

Weak hypothesis:

“Changing color might improve conversions.”

Strong hypothesis:

“Changing CTA color to orange will increase conversions because it creates visual contrast and draws attention.”

Step 4: Calculate Sample Size

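Decide the sample size before launch and avoid stopping tests early. Reaching statistical significance on a pre-planned sample makes results reliable; checking repeatedly and stopping at the first significant result does not.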

Key factors:

Traffic volume

Baseline conversion rate

Minimum detectable effect

Confidence level (usually 95%)

Statistical power (commonly 80%)
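The factors above plug into the standard normal-approximation formula for comparing two conversion rates. This Python sketch computes the visitors needed per variation for a two-sided test. The 80% power default is a common convention assumed here, and the 3% baseline and 20% relative lift in the usage line are illustrative numbers. Traffic volume then determines how long it takes to collect that sample.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate: float, relative_mde: float,
                              confidence: float = 0.95, power: float = 0.80) -> int:
    """Visitors needed in EACH variation for a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # rate we want to be able to detect
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be in (0, 1) and the MDE must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    z_beta = NormalDist().inv_cdf(power)                      # about 0.84 for 80%
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # 3% baseline conversion rate, detect a 20% relative lift (3.0% -> 3.6%).
    print(sample_size_per_variation(0.03, 0.20))
```

For these inputs the function returns roughly 14,000 visitors per variation. Halving the detectable lift roughly quadruples the required sample, which is why small tweaks on low-traffic pages are hard to test.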

Step 5: Run the Test

Best practices:

✔ Random traffic distribution

✔ Run for full business cycles

✔ Avoid mid-test changes

✔ Test one hypothesis at a time
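One way to confirm that traffic distribution really was random is a sample ratio mismatch (SRM) check: compare the observed visitor counts with the planned split. This is a common practice in experimentation platforms rather than a step this guide prescribes. The sketch below uses a chi-square goodness-of-fit test with one degree of freedom and only the standard library; the function name is hypothetical.

```python
import math


def srm_p_value(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for the observed split."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


if __name__ == "__main__":
    print(srm_p_value(5_000, 5_400))  # planned 50/50, observed 5,000 vs 5,400
```

With a planned 50/50 split, 5,000 vs 5,400 visitors gives a p-value below 0.001. A result that small usually points to a bug in assignment or tracking rather than chance, and many teams investigate it before reading any conversion results.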

Step 6: Analyze Results

Evaluate:

Conversion lift

Statistical significance

Revenue impact

User behavior changes
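Conversion lift and statistical significance can be computed from four numbers per test. This is a minimal sketch using a two-proportion z-test (one standard method; some tools use Bayesian or sequential statistics instead), with a confidence interval for the absolute difference. The function name and the counts in the usage line are hypothetical.

```python
import math
from statistics import NormalDist


def analyze(conv_a: int, n_a: int, conv_b: int, n_b: int, confidence: float = 0.95) -> dict:
    """Compare control A with variation B using a two-sided two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Pooled standard error under the null hypothesis of no difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Unpooled standard error for the confidence interval of the difference.
    se_diff = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return {
        "rate_a": p_a,
        "rate_b": p_b,
        "relative_lift": diff / p_a,
        "p_value": p_value,
        "significant": p_value < 1 - confidence,
        "diff_ci": (diff - z_crit * se_diff, diff + z_crit * se_diff),
    }


if __name__ == "__main__":
    print(analyze(conv_a=300, n_a=10_000, conv_b=360, n_b=10_000))
```

With 300 conversions from 10,000 visitors for A and 360 from 10,000 for B, this reports a 20% relative lift with a p-value of about 0.02, significant at 95%. Revenue impact and behavior changes still need their own analysis.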

Step 7: Implement & Iterate

Optimization is continuous.

Test → Learn → Improve → Repeat

Traffic Requirements & Statistical Significance

Why Traffic Matters

Low traffic can produce misleading results.

General Guidelines

A/B Testing

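Ideally around 1,000 conversions per variation; the true minimum depends on your baseline conversion rate and the smallest lift you want to detect

Smaller sites can compensate by running tests longer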

Split Testing

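Often planned with more traffic: a large true difference is actually easier to detect, but a redesign changes many things at once whose effects can partly cancel out, so the net difference may be smaller than expected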

Recommended for high-volume pages

Statistical Confidence

Aim for:

✔ 95% confidence level

✔ Minimum 2 full business cycles

✔ Avoid weekend-only testing

Tools for A/B and Split Testing

Website & Landing Page Testing

Convert (a common Google Optimize alternative; Google discontinued Optimize in September 2023)

Optimizely

VWO

Unbounce

Instapage

Email Testing

Mailchimp

HubSpot

ActiveCampaign

Ad Testing

Meta Ads Experiments

Google Ads Experiments

Heatmaps & Behavior Tools

Hotjar

Microsoft Clarity

Crazy Egg

Real-World Examples

Example 1: CTA Optimization (A/B Test)

A SaaS company tested:

A: “Start Free Trial”

B: “Get Started Free”

Result: 18% conversion increase.

Example 2: Landing Page Redesign (Split Test)

An eCommerce brand tested:

A: Traditional product page

B: Storytelling + video-first page

Result: 32% higher conversions.

Example 3: Checkout Flow (Split Test)

A: One-page checkout

B: Multi-step checkout

Result: Multi-step improved completion rate.

Common Testing Mistakes to Avoid

1. Testing Too Many Variables

Leads to unclear results.

2. Ending Tests Early

False winners are common.

3. Ignoring Statistical Significance

Small samples mislead decisions.

4. Testing Without Hypothesis

Leads to random experimentation.

5. Not Segmenting Results

Different audiences behave differently.

6. Running Tests During Traffic Spikes

Seasonality can skew results.

7. Copying Competitor Changes Blindly

What works for them may not work for you.
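Mistake 2 is easy to demonstrate with an A/A simulation. Both versions are identical, so every "winner" is a false positive. This sketch uses only the standard library and arbitrary assumptions: 4,000 visitors per version, a 5% conversion rate, and a check every 200 visitors. It compares stopping at the first significant peek with evaluating once at the planned sample size.

```python
import math
import random
from statistics import NormalDist


def z_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided two-proportion z-test p-value."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    if p_pool in (0, 1):
        return 1.0  # no variation at all, nothing to test
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rates(runs: int = 300, visitors: int = 4_000, check_every: int = 200,
                         rate: float = 0.05, alpha: float = 0.05, seed: int = 7):
    """Simulate A/A tests and return (rate with peeking, rate at fixed sample size)."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        stopped_early = False
        for n in range(1, visitors + 1):
            conv_a += rng.random() < rate  # True counts as 1
            conv_b += rng.random() < rate
            if n % check_every == 0 and not stopped_early:
                # A "peeker" declares a winner the first time p < alpha.
                stopped_early = z_test_p_value(conv_a, n, conv_b, n) < alpha
        peeking_hits += stopped_early
        fixed_hits += z_test_p_value(conv_a, visitors, conv_b, visitors) < alpha
    return peeking_hits / runs, fixed_hits / runs


if __name__ == "__main__":
    peek, fixed = false_positive_rates()
    print(f"False winners with peeking: {peek:.0%}, at fixed sample size: {fixed:.0%}")
```

Peeking 20 times typically flags a "winner" in a much larger share of runs than the roughly 5% you get from a single check at the planned end. This is why fixed sample sizes, or statistical methods designed for repeated checks, matter.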

Advanced Optimization Strategies

Multivariate Testing

Tests multiple variables simultaneously.

Best for:

High traffic sites

Complex optimization
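Multivariate testing needs high traffic for a combinatorial reason: a full-factorial test treats every combination of options as its own variation. Here is a sketch with hypothetical page elements, assuming each combination needs the same per-variation sample as a simple A/B test (14,000 here, an arbitrary illustrative figure).

```python
from itertools import product

# Hypothetical page elements and their options for a multivariate test.
ELEMENTS = {
    "headline": ["Benefit-driven", "Curiosity-based", "Direct"],
    "hero_image": ["Product", "Lifestyle"],
    "cta_text": ["Buy Now", "Get Started"],
}


def full_factorial(elements: dict) -> list:
    """Every combination of options; each one is a separate variation to test."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


if __name__ == "__main__":
    combos = full_factorial(ELEMENTS)
    per_variation = 14_000  # assumed result of a sample-size calculation
    print(len(combos), "variations,", len(combos) * per_variation, "visitors in total")
    # prints: 12 variations, 168000 visitors in total
```

Three headlines, two images, and two CTA texts already produce 12 variations, so the total traffic requirement grows multiplicatively with every element you add.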

Sequential Testing

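Runs tests in successive cycles, each building on the previous winner, to refine results. In statistics, "sequential testing" also refers to methods that let you check results as data arrive without inflating the false-positive rate.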

Personalization Testing

Show variations based on:

device

location

behavior

traffic source

AI-Driven Testing

Modern tools auto-optimize variations using machine learning.
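Vendors rarely document their algorithms, but one widely used machine-learning approach to auto-optimization is the multi-armed bandit. The sketch below implements Thompson sampling with Beta(1, 1) priors to illustrate that technique, not any specific tool; the conversion rates are simulated assumptions. Unlike a fixed-split test, it shifts traffic toward the variation that currently looks best, which reduces lost conversions during the test but makes a clean, fixed-sample comparison harder.

```python
import random


def thompson_sampling(true_rates, visitors: int = 20_000, seed: int = 1):
    """Simulate Thompson sampling over variations with Beta(1, 1) priors.

    `true_rates` are hypothetical conversion rates used only to simulate
    visitor behavior; in a real test they are unknown.
    Returns (visitors shown each variation, conversions per variation).
    """
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(visitors):
        # Draw a plausible conversion rate for each variation from its posterior.
        draws = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
        arm = max(range(len(draws)), key=lambda i: draws[i])
        # Simulate whether this visitor converts on the chosen variation.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    shown = [s + f for s, f in zip(successes, failures)]
    return shown, successes


if __name__ == "__main__":
    shown, conversions = thompson_sampling([0.02, 0.05, 0.03])
    print("visitors per variation:", shown)
    print("conversions per variation:", conversions)
```

With simulated rates of 2%, 5%, and 3%, most of the 20,000 visitors end up on the 5% variation.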

A/B Testing & Split Testing in Performance Marketing

For digital marketers, testing affects:

Paid Ads

creatives

copy

landing pages

SEO & CRO

content layout

CTA placement

engagement elements

Email Funnels

subject lines

timing

personalization

Conversion Funnels

checkout flow

lead forms

upsell structure

How to Decide Which Testing Method to Use

Ask yourself:

✔ Am I testing a small element? → A/B test

✔ Am I testing the entire experience? → Split test

✔ Do I want micro improvements? → A/B test

✔ Am I redesigning strategy? → Split test

✔ Do I have low traffic? → A/B test

✔ Do I want big conversion jumps? → Split test

Final Thoughts

Both A/B testing and split testing are powerful tools in a growth marketer’s toolkit. The key is understanding when to use each method and how to interpret results correctly.

Remember:

✔ A/B testing = micro improvements

✔ Split testing = macro experience comparison

✔ Data beats assumptions

✔ Continuous testing drives growth

✔ Optimization is an ongoing process

Organizations that build a culture of experimentation tend to outperform competitors, not because they guess better, but because they learn faster.
